Hackers Exploit Free Google Favicon Generator for Phishing

Adrien Gendre

—April 09, 2020

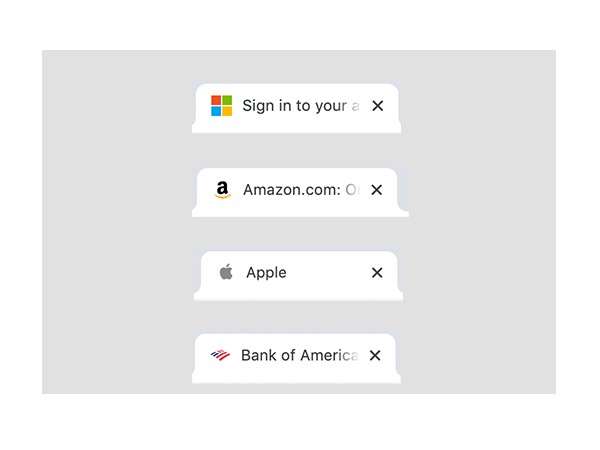

Favicons, small icons also known as website icons and shortcut icons, are displayed in browser tabs and provide a visual cue for the webpages you have open in your browser. Because most of us tend to visit the same websites on a daily basis, we can quickly glance at a browser tab and instantly recognize a brand’s icon. If you saw one of these tabs in your browser, you’d probably never guess you were on a phishing page.

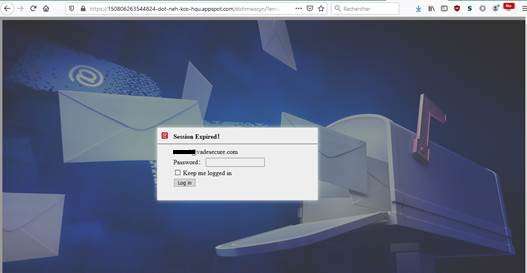

In February 2020, Vade detected a phishing campaign using a free favicon generator to pull a brand’s favicon into the tab of a phishing site, making the webpage look like a legitimate brand webpage.

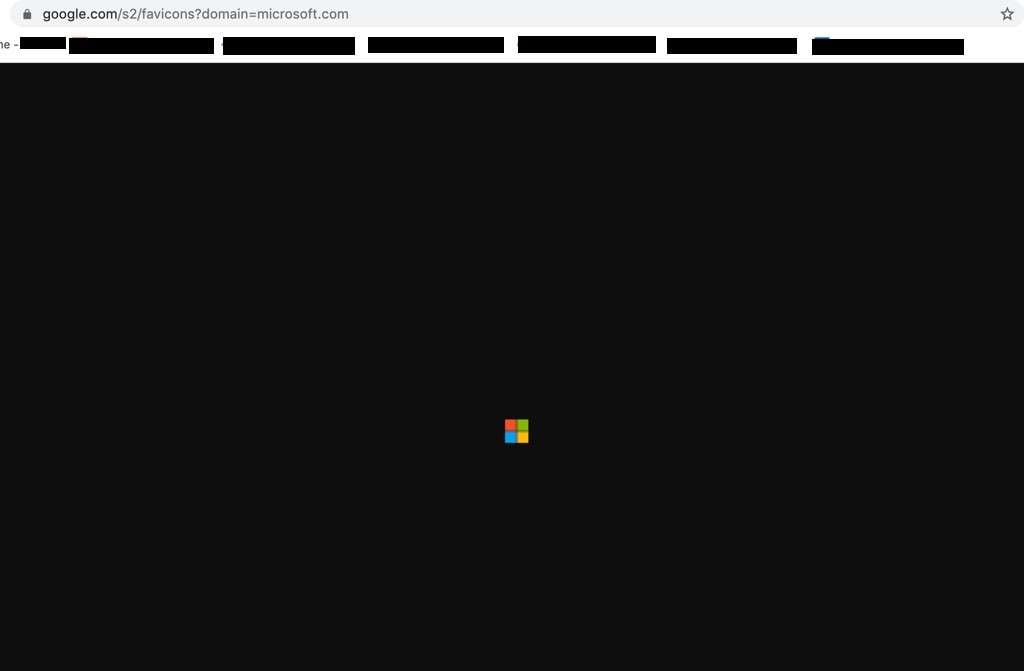

The technique exploits a free Google service. Google Shared Stuff, a social bookmarking service similar to del.icio.us, launched in 2007 and was later shut down. However, the Google Shared Stuff (s2) URL still functions as an icon generator.

Adding the domain of any website at the end of a Google (s2) URL will automatically generate the brand’s favicon, which can be downloaded and added to phishing pages.

Hackers have improved upon this method by appending the phishing victim’s email address to the end of the URL. This technique dynamically generates the favicon based on the domain in the victim’s email address, automating the process of impersonating a targeted business.
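From a defender's point of view, the pattern described above can be checked for in a page's HTML. The following is a minimal sketch, not a description of any vendor's detection logic. It assumes the favicon is requested from the commonly seen `https://www.google.com/s2/favicons?domain=...` form of the s2 URL and that the page declares it in a `<link rel="icon">` tag. The function names `s2_favicon_domains` and `favicon_matches_recipient` are hypothetical.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import parse_qs, urlparse

# Assumption: the s2 favicon endpoint is reached at this host and path.
S2_HOSTS = {"www.google.com", "google.com"}
S2_PATH = "/s2/favicons"


def email_domain(address: str) -> str:
    """Return the lowercased part after the last '@' (or the whole string if no '@')."""
    return address.rsplit("@", 1)[-1].strip().lower()


class _IconLinkParser(HTMLParser):
    """Collects href values of <link> tags whose rel attribute includes 'icon'."""

    def __init__(self) -> None:
        super().__init__()
        self.icon_hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower()
        href = attr.get("href")
        if "icon" in rel.split() and href:
            self.icon_hrefs.append(href)


def s2_favicon_domains(html: str) -> List[str]:
    """List the domains a page asks the s2 endpoint to render favicons for."""
    parser = _IconLinkParser()
    parser.feed(html)
    found = []
    for href in parser.icon_hrefs:
        url = urlparse(href)
        if url.hostname in S2_HOSTS and url.path == S2_PATH:
            # The parameter may hold a bare domain or a full email address.
            found.extend(email_domain(d) for d in parse_qs(url.query).get("domain", []))
    return found


def favicon_matches_recipient(html: str, recipient: str) -> bool:
    """True if the page's s2 favicon is generated from the recipient's own domain."""
    return email_domain(recipient) in s2_favicon_domains(html)
```

A page whose favicon is generated from the same domain as the recipient's email address is not proof of phishing, but it is a signal worth combining with other indicators.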

Phishing pages are often distinguishable from legitimate websites because they lack favicons and other quality images and have long, complicated URLs. This new favicon technique gives hackers a way to add visual authenticity to their phishing pages, which could fool the average user who is not looking for the other signs of phishing.

The emergence of image manipulation in phishing

This new threat confirms that hackers are increasingly relying on image manipulation in phishing attacks. In addition to favicons, brand logos, QR codes, and other images can be distorted to the point that they are no longer recognizable to email filters yet appear normal to the naked eye.

If an email filter blacklists a phishing email and a hacker then manipulates the image in the email and tries again, the filter may not recognize it as the blacklisted email. To the filter, the altered email looks like nothing it has seen before.

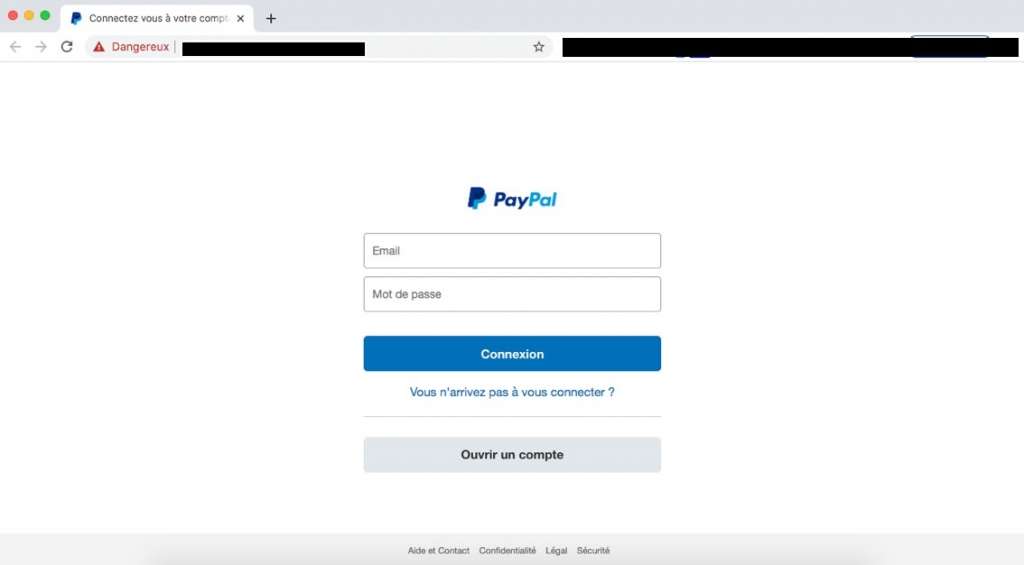

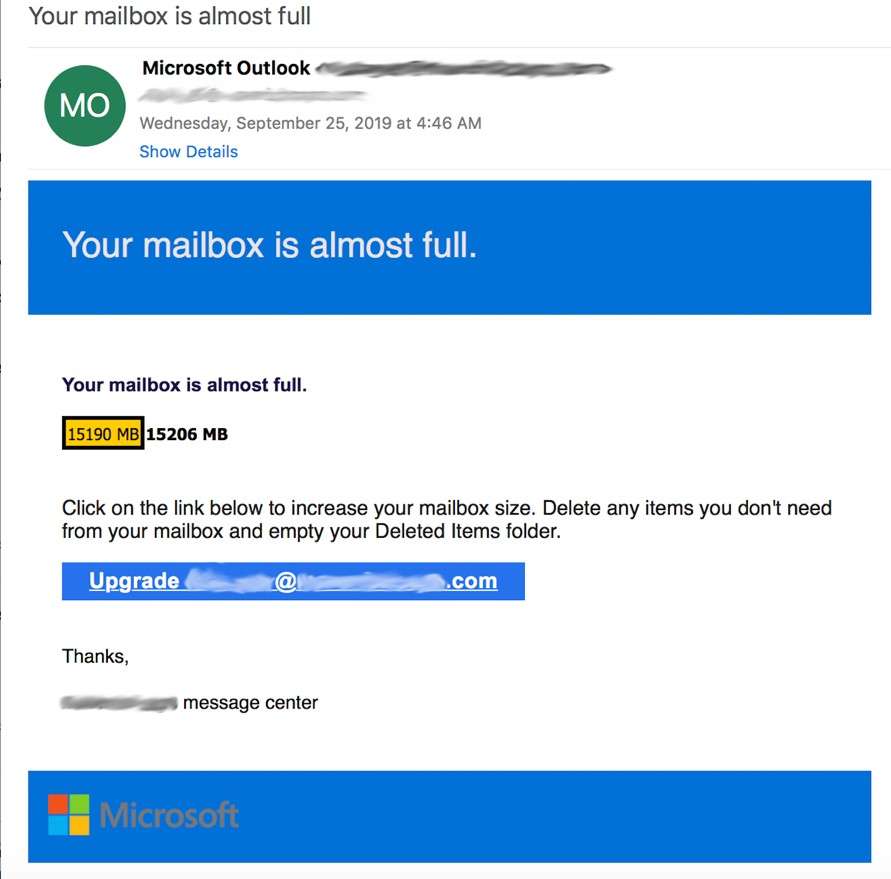

In the Office 365 phishing attack below, the hacker placed a gray Microsoft logo on a blue background. This changes the image’s cryptographic hash, which is a type of fingerprint that a filter can recognize only if it has seen that exact image before.

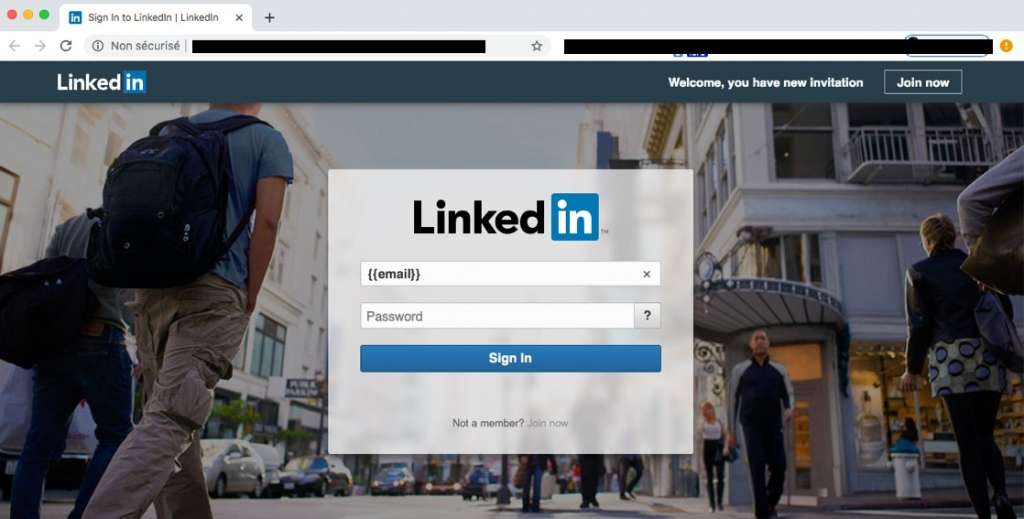

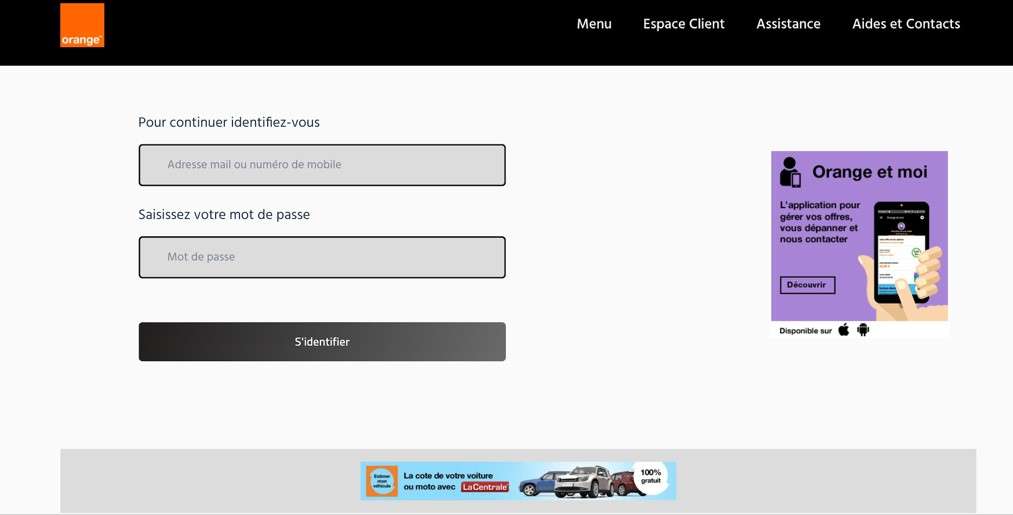
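The effect of a small visual change on a cryptographic hash can be illustrated with a short sketch. It assumes SHA-256 as the hash function (the actual algorithm a given filter uses is not stated here) and uses a few synthetic bytes as a stand-in for real image data. The `fingerprint` helper and the `blocklist` set are hypothetical.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of the raw image bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


# Stand-in for pixel data: 16 light-gray RGB pixels.
original = bytes([200, 200, 200] * 16)

# The attacker changes a single color value by one step.
altered = bytearray(original)
altered[0] = 201
altered = bytes(altered)

# A filter that only remembers exact fingerprints of known-bad images.
blocklist = {fingerprint(original)}

print(fingerprint(original) in blocklist)  # True: the exact image is recognized
print(fingerprint(altered) in blocklist)   # False: one changed byte yields a new hash
```

Because any change to the input produces an unrelated digest, exact-match fingerprinting cannot tell that the altered image is nearly identical to the blacklisted one.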

In the example below, the hacker has blurred the brand logo just enough to bypass an email filter. This subtle distortion would likely not make the average user suspicious of the webpage.

Email filters relying on fingerprint scanning technology to identify phishing emails cannot recognize image distortion. While some filters use Computer Vision algorithms with template matching technology, they do not recognize subtle changes to geometry and color and likely would not recognize the above threats.

Identifying and blocking phishing pages

Computer Vision models based on convolutional neural networks (CNNs) are more sophisticated than models that rely on template matching algorithms. Trained to recognize unexpected configurations and view images as humans do, this type of model is more resilient to small changes in images and will identify images that have been distorted.

This latest advancement in image detection is relatively new in anti-phishing technology but has proven to be highly effective at recognizing brand images and logos in phishing emails and webpages.

Finally, in those cases where a phishing email does bypass a filter, your users need phishing awareness training to recognize the signs. Glancing at a browser tab and seeing a brand’s favicon might make the page look legitimate, but users should consider other elements of the page. Although many phishing pages are extremely sophisticated and are exact replicas of legitimate webpages, they typically have long, complicated URLs with special characters and country codes.
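The URL warning signs mentioned above (length, special characters, country codes) can be expressed as a rough heuristic. This is an illustrative sketch only: the thresholds `MAX_LENGTH` and `MAX_SPECIAL`, the character set, and the function name `url_warning_signs` are assumptions made for the example, and plenty of legitimate sites use country-code domains, so a result here is a prompt to look closer rather than a verdict.

```python
from typing import List
from urllib.parse import urlparse

# Hypothetical thresholds chosen for illustration, not tuned on real data.
MAX_LENGTH = 75
MAX_SPECIAL = 5
SPECIAL_CHARS = set("@%-_=&~")


def url_warning_signs(url: str) -> List[str]:
    """Return a list of simple warning signs found in a URL."""
    host = urlparse(url).hostname or ""
    signs = []

    if len(url) > MAX_LENGTH:
        signs.append("long URL")

    special = sum(ch in SPECIAL_CHARS for ch in url)
    if special > MAX_SPECIAL:
        signs.append("many special characters")

    # Two-letter top-level domains are country codes (for example .ru or .br).
    tld = host.rsplit(".", 1)[-1]
    if len(tld) == 2 and tld.isalpha():
        signs.append("country-code domain")

    return signs


if __name__ == "__main__":
    # Made-up URL used purely as an example input.
    print(url_warning_signs(
        "http://login-secure.example-portal.co.ru/account/verify"
        "?session=abc%20def&user=a@b.com&redirect=home_page"
    ))
```

A check like this is best used as a training aid or as one input among several, alongside the image-based detection described earlier.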